What Are Unified API Platforms

Unified API platforms provide a single, standardized interface to access multiple third-party APIs within specific software categories, acting as abstraction layers that simplify integrations by standardizing data models, authentication flows, and endpoints across different providers.

These platforms remove the need to build and maintain a separate integration for each service. That reduces development time and maintenance costs, and it makes adding providers easier as needs grow. Compared with direct API integrations, unified platforms offer faster time to market and a more consistent developer experience.

How Unified API Platforms Work

Unified API platforms sit between developers and multiple service providers. They translate each provider's request formats, response schemas, and authentication schemes into one standardized interface. The platform normalizes data into a common model and manages authentication with each provider on the developer's behalf.

These platforms offer standardized operations for common actions across all integrated services, allowing developers to interact with a single, well-documented API. The platform vendor handles ongoing maintenance and updates as source APIs change.
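
The translation step can be illustrated with a minimal Python sketch of the adapter pattern a unified layer might use internally. The two provider response shapes, the `Completion` model, and the `normalize` function are hypothetical. They are only loosely modeled on common chat APIs. Real platforms handle many more fields, error formats, and streaming.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class Completion:
    """Hypothetical normalized response shape exposed by the unified layer."""
    provider: str
    text: str
    input_tokens: int
    output_tokens: int


def from_provider_a(raw: Dict[str, Any]) -> Completion:
    # Hypothetical provider A nests text under choices[0].message.content
    return Completion(
        provider="provider_a",
        text=raw["choices"][0]["message"]["content"],
        input_tokens=raw["usage"]["prompt_tokens"],
        output_tokens=raw["usage"]["completion_tokens"],
    )


def from_provider_b(raw: Dict[str, Any]) -> Completion:
    # Hypothetical provider B returns a list of typed content blocks
    text = "".join(block["text"] for block in raw["content"] if block["type"] == "text")
    return Completion(
        provider="provider_b",
        text=text,
        input_tokens=raw["usage"]["input_tokens"],
        output_tokens=raw["usage"]["output_tokens"],
    )


# One adapter per provider; callers only ever see Completion.
ADAPTERS: Dict[str, Callable[[Dict[str, Any]], Completion]] = {
    "provider_a": from_provider_a,
    "provider_b": from_provider_b,
}


def normalize(provider: str, raw: Dict[str, Any]) -> Completion:
    """Translate a provider-specific response into the common model."""
    try:
        adapter = ADAPTERS[provider]
    except KeyError:
        raise ValueError(f"no adapter registered for {provider!r}") from None
    return adapter(raw)
```

Adding a provider means registering one more adapter. Application code that consumes `Completion` does not change.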

Best Unified API Platforms 2026

1. OpenRouter: Universal LLM Interface

OpenRouter provides a unified interface to language models from 60+ providers, including OpenAI, Anthropic, and Google, through a single API. It advertises better prices and improved uptime and requires no subscription. When one provider goes down, it automatically falls back to another. Core features include:

- access to 500+ models
- OpenAI SDK compatibility
- distributed infrastructure for reliability
- edge deployment to minimize latency
- custom data policies for enterprise security

OpenRouter suits applications that need several LLM providers behind one integration, cost optimization, and high availability.
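
OpenRouter's API is OpenAI-compatible, so a chat completion request is a standard OpenAI-style JSON body posted to OpenRouter's endpoint. The sketch below uses only the Python standard library. It builds the request without sending it. The model slug `openai/gpt-4o-mini` is an illustrative example, so check OpenRouter's model list for current names. With the official OpenAI SDK, the equivalent is pointing the client's `base_url` at `https://openrouter.ai/api/v1`.

```python
import json
import urllib.request

# OpenAI-compatible chat completions endpoint exposed by OpenRouter
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_openrouter_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for OpenRouter."""
    body = {
        "model": model,  # OpenRouter model slugs look like "<provider>/<model>"
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat(req: urllib.request.Request) -> str:
    """Send the request and return the first choice's text (OpenAI response shape)."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

To make a real call, pass a key from your environment. For example: `send_chat(build_openrouter_request("openai/gpt-4o-mini", "Say hello.", os.environ["OPENROUTER_API_KEY"]))`.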

2. fal.ai: Generative Media Platform

fal.ai is a generative media platform offering 600+ production-ready image, video, audio, and 3D models through a unified API. Its main capabilities are:

- serverless GPUs that scale on demand
- the fal Inference Engine, which fal says delivers up to 10x faster diffusion model inference
- access to H100, H200, and B200 GPUs
- dedicated compute clusters for training workloads
- enterprise-grade reliability

fal.ai suits applications that need generative media, fast inference, scalable infrastructure, or custom model deployment.
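
Unlike OpenAI-compatible APIs, fal.ai's HTTP API takes a model-specific JSON input and authenticates with a `Key` scheme rather than `Bearer`. The sketch below builds a synchronous request using the `https://fal.run/<model-id>` pattern from fal's documentation. The model ID `fal-ai/flux/dev` and the `prompt` field are examples. Each model defines its own input schema, so check the model page before relying on them. For longer jobs, fal also offers a queue-based endpoint and an official `fal-client` Python package.

```python
import json
import urllib.request

# Synchronous inference base URL (assumed from fal's documented REST pattern)
FAL_SYNC_BASE = "https://fal.run"


def build_fal_request(model_id: str, arguments: dict, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a synchronous fal.ai inference request.

    `arguments` must match the input schema of the chosen model.
    """
    return urllib.request.Request(
        f"{FAL_SYNC_BASE}/{model_id}",
        data=json.dumps(arguments).encode("utf-8"),
        headers={
            "Authorization": f"Key {api_key}",  # fal uses "Key", not "Bearer"
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_fal(req: urllib.request.Request) -> dict:
    """Send the request and return the model's JSON output as a dict."""
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)
```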

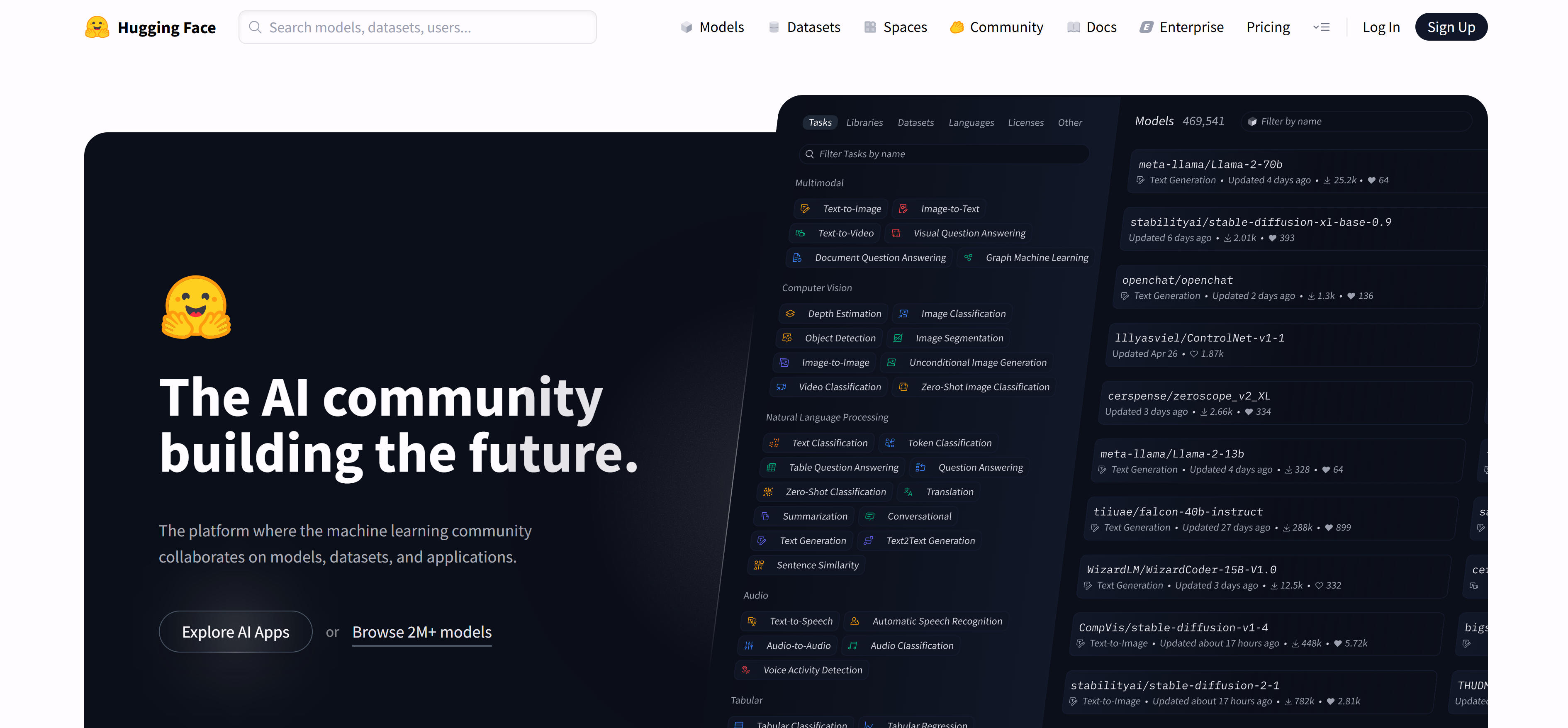

3. Hugging Face: ML Community Hub

Hugging Face is the largest machine learning community platform, hosting 2M+ models, 500k+ datasets, and 1M+ applications. It offers several ways to use those models:

- Inference Providers: a unified API to 45,000+ models served by leading AI providers, with no service fees
- Inference Endpoints: optimized, dedicated deployments
- Spaces: hosting for applications
- enterprise plans with security and access controls

Hugging Face suits teams that need diverse ML models across all modalities, community-driven model discovery, optimized inference deployment, and collaborative ML development.

4. Fireworks: Fast Inference Engine

Fireworks provides a fast inference engine for language models, built for optimized performance, low latency, and enterprise-grade reliability. It serves models from multiple providers. It also supports custom model deployment on dedicated infrastructure, with enterprise security features. Fireworks suits workloads with strict latency requirements, high-performance inference, custom model deployment, and enterprise reliability needs.

5. Vertex AI: Google Cloud Platform

Vertex AI is Google Cloud's unified machine learning platform, giving access to Google's AI models and services through a single interface. It offers:

- AutoML for automated model development
- custom model training and deployment
- MLOps tools for production workflows
- integration with the rest of Google Cloud

Vertex AI suits teams that need Google's AI models, already run on Google Cloud, or want automated ML workflows with comprehensive MLOps support.

6. Replicate: Model Deployment Platform

Replicate runs machine learning models in the cloud, with easy deployment, automatic scaling, and pay-per-use pricing. It hosts thousands of pre-trained models behind an API. Users can also deploy custom models with minimal configuration. Replicate suits teams that want to deploy models quickly, pay only for what they use, and avoid managing infrastructure.
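
Replicate's HTTP API follows a predictions model: you POST an `input` object for a given model and receive a prediction record. The sketch below builds a request for the official-models endpoint `https://api.replicate.com/v1/models/<owner>/<name>/predictions`. It also sets the `Prefer: wait` header, which asks Replicate to hold the connection open, for a limited time, until the prediction finishes. The model `black-forest-labs/flux-schnell` and its `prompt` input are examples. Verify the current endpoint and input schema in Replicate's API reference.

```python
import json
import urllib.request

REPLICATE_API = "https://api.replicate.com/v1"


def build_replicate_request(
    model: str, model_input: dict, api_token: str, wait: bool = True
) -> urllib.request.Request:
    """Build (but do not send) a prediction request for an official Replicate model.

    `model` is "<owner>/<name>"; `model_input` must match that model's schema.
    """
    owner, name = model.split("/", 1)
    headers = {
        "Authorization": f"Bearer {api_token}",
        "Content-Type": "application/json",
    }
    if wait:
        headers["Prefer"] = "wait"  # ask the API to block until the prediction completes
    return urllib.request.Request(
        f"{REPLICATE_API}/models/{owner}/{name}/predictions",
        data=json.dumps({"input": model_input}).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

Sending it with `urllib.request.urlopen` returns the prediction as JSON. With `wait=False`, the response describes a pending prediction, which you would poll instead.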

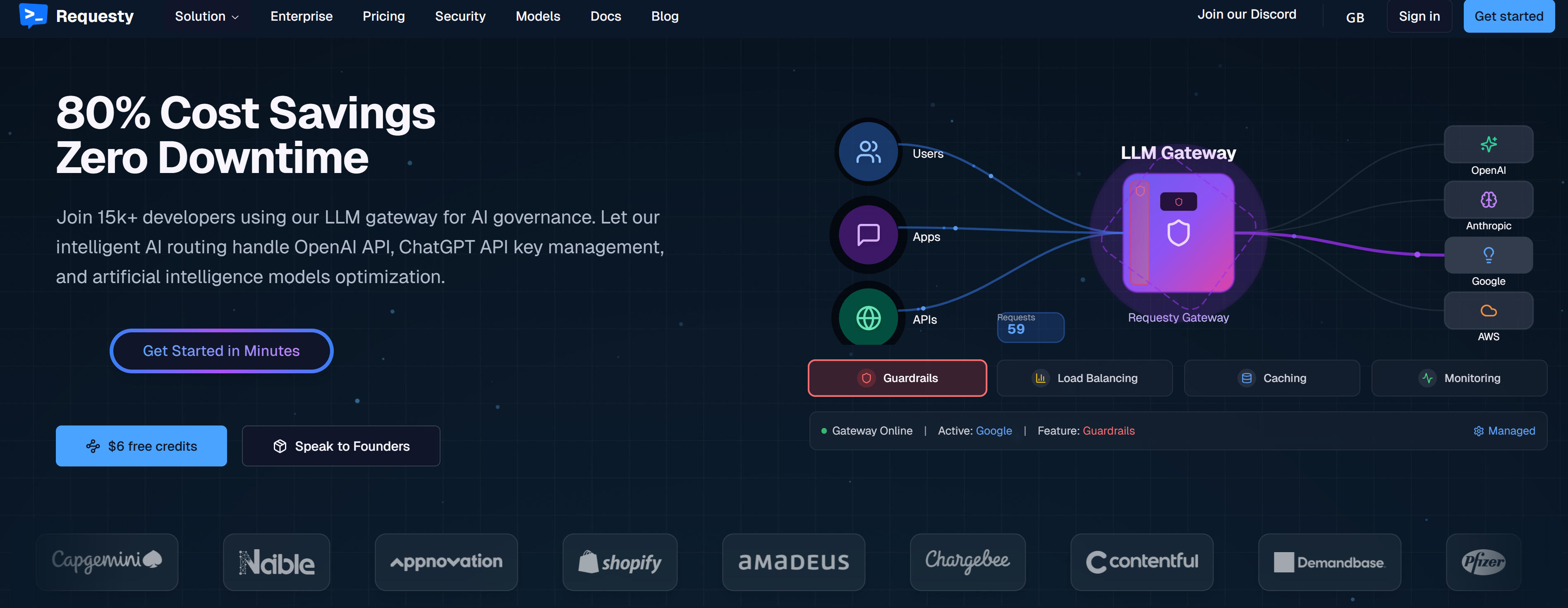

7. Requesty: Enterprise API Gateway

Requesty provides an enterprise API gateway for unified access to multiple APIs. Its features include:

- request routing and load balancing
- rate limiting and throttling
- authentication management
- monitoring and analytics

Requesty suits enterprises that want a single managed entry point to many APIs, with enterprise-grade security and centralized monitoring.
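
Gateways often implement rate limiting with a token bucket. Each client has a bucket that refills at a fixed rate, and a request is admitted only if a token is available. The following is a generic sketch of that technique, not Requesty's implementation. The `TokenBucket` and `PerClientLimiter` classes are hypothetical, and the injectable `clock` parameter exists only to make the behavior easy to test.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Admit up to `capacity` requests in a burst, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock: Callable[[], float] = time.monotonic):
        if rate <= 0 or capacity <= 0:
            raise ValueError("rate and capacity must be positive")
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class PerClientLimiter:
    """Keep one bucket per API key so one noisy client cannot starve the others."""

    def __init__(self, rate: float, capacity: float, clock: Callable[[], float] = time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_key: str) -> bool:
        bucket = self.buckets.get(client_key)
        if bucket is None:
            bucket = self.buckets[client_key] = TokenBucket(self.rate, self.capacity, self.clock)
        return bucket.allow()
```

A gateway would typically respond with HTTP 429 when `allow` returns `False`.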

8. AWS Bedrock: Amazon AI Services

AWS Bedrock provides API access to foundation models from leading AI companies. AWS describes it as offering the broadest choice of foundation models and the deepest set of capabilities for building generative AI applications with security, privacy, and responsible AI. Core features include:

- access to foundation models
- model customization with fine-tuning
- retrieval-augmented generation (RAG)
- agents for complex tasks
- integration with other AWS services

AWS Bedrock suits teams that need foundation models integrated with AWS infrastructure, fine-tuning, and enterprise-grade security and compliance.
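
On Bedrock, the Converse API in `boto3`'s `bedrock-runtime` client provides one message format across the foundation models it supports. The sketch below separates building the request arguments, which can be tested without AWS access, from the call itself. The model ID `anthropic.claude-3-haiku-20240307-v1:0` and region `us-east-1` are examples. Model availability depends on your account's model access settings and region.

```python
from typing import Any, Dict


def build_converse_kwargs(
    model_id: str, prompt: str, max_tokens: int = 512, temperature: float = 0.5
) -> Dict[str, Any]:
    """Arguments for bedrock-runtime `converse`, in Bedrock's model-agnostic message format."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def converse(model_id: str, prompt: str, region: str = "us-east-1") -> str:
    """Call Bedrock and return the reply text. Requires boto3 and AWS credentials."""
    import boto3  # imported here so the builder above works without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_kwargs(model_id, prompt))
    return response["output"]["message"]["content"][0]["text"]
```

Switching to another Bedrock model is, in the common case, a change to `model_id` only. Supported inference parameters can still vary by model.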

Comparison

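The table below summarizes the platforms covered above by focus, key features, and best fit:

| Platform | Focus | Key features | Best suited for |
|---|---|---|---|
| OpenRouter | LLM access | 500+ models from 60+ providers, OpenAI SDK compatibility, automatic provider fallback, no subscriptions | Multi-provider LLM access, cost optimization, high availability |
| fal.ai | Generative media | 600+ image, video, audio, and 3D models; serverless GPUs; fal Inference Engine; H100/H200/B200 access | Generative media, fast inference, custom model deployment |
| Hugging Face | ML model hub | 2M+ models, 500k+ datasets, Inference Providers (45,000+ models), Inference Endpoints, Spaces | Diverse ML models, model discovery, collaborative ML development |
| Fireworks | LLM inference | Low-latency inference, multiple model providers, custom model deployment on dedicated infrastructure | High-performance, low-latency inference |
| Vertex AI | Google Cloud ML platform | Google AI models, AutoML, custom training and deployment, MLOps tools | Google Cloud workloads, automated ML, MLOps |
| Replicate | Model hosting | Thousands of pre-trained models, automatic scaling, pay-per-use pricing | Quick deployment with minimal infrastructure management |
| Requesty | API gateway | Request routing and load balancing, rate limiting, authentication management, monitoring | Centralized enterprise API management |
| AWS Bedrock | AWS foundation models | Foundation models, fine-tuning, RAG, agents | AWS-integrated generative AI with enterprise compliance |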

Use Cases

Multi-Model Access

Developers use platforms like OpenRouter and Hugging Face to access multiple models from different providers through unified APIs, enabling flexible model selection and switching without managing separate integrations. These platforms eliminate the need to build and maintain custom integrations for each provider, significantly reducing development time and complexity.
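
Switching is cheap because several of the platforms in this article expose OpenAI-compatible chat endpoints. The request body stays the same, and only the base URL, API key, and model slug change. The base URLs below reflect the documented OpenAI-compatible endpoints of OpenRouter, Fireworks, and Hugging Face Inference Providers at the time of writing; verify them before use. `PROVIDERS` and `chat_request` are hypothetical names.

```python
import json
import urllib.request
from typing import Dict

# Base URLs of OpenAI-compatible chat APIs (verify against each provider's docs).
PROVIDERS: Dict[str, str] = {
    "openrouter": "https://openrouter.ai/api/v1",
    "fireworks": "https://api.fireworks.ai/inference/v1",
    "huggingface": "https://router.huggingface.co/v1",
}


def chat_request(provider: str, model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) the same OpenAI-style chat request for any provider in PROVIDERS."""
    try:
        base = PROVIDERS[provider]
    except KeyError:
        raise ValueError(f"unknown provider {provider!r}") from None
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
```

Model slugs are provider-specific. The same Llama model might be addressed differently on OpenRouter and Fireworks, so keeping slugs in configuration alongside the provider name makes a switch a one-line change.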

Cost Optimization

Platforms like OpenRouter expose many providers' models, and their prices, through one API. Developers can therefore pick the most cost-effective model for each use case without changing their integration. Because switching models is a configuration change rather than a rewrite, applications can scale cost-effectively and reduce vendor lock-in.
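
Once prices are known, choosing the most cost-effective model is simple arithmetic. Per-request cost is input tokens times the input price plus output tokens times the output price. The sketch below uses hypothetical per-million-token prices for two made-up models. It shows that the cheapest choice depends on the workload's mix of input and output tokens. Substitute the prices your platform actually publishes.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ModelPrice:
    name: str
    input_per_mtok: float   # USD per 1M input tokens
    output_per_mtok: float  # USD per 1M output tokens


def request_cost(price: ModelPrice, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request with the given token counts."""
    return (input_tokens * price.input_per_mtok + output_tokens * price.output_per_mtok) / 1_000_000


def cheapest(models: Iterable[ModelPrice], input_tokens: int, output_tokens: int) -> ModelPrice:
    """Pick the model with the lowest per-request cost for this workload shape."""
    return min(models, key=lambda m: request_cost(m, input_tokens, output_tokens))


# Hypothetical prices for illustration only.
MODELS = [
    ModelPrice("model-a", input_per_mtok=1.0, output_per_mtok=4.0),
    ModelPrice("model-b", input_per_mtok=0.5, output_per_mtok=8.0),
]
```

With these numbers, the cheaper model flips with the workload:

- 2,000 input and 500 output tokens: `model-a` costs $0.0040 and `model-b` $0.0050, so `model-a` wins.
- 10,000 input and 100 output tokens: `model-a` costs $0.0104 and `model-b` $0.0058, so `model-b` wins.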

Reliability and Uptime

Unified platforms provide automatic fallback mechanisms that keep applications available when individual providers experience downtime. OpenRouter offers automatic failover, and fal.ai advertises 99.99% uptime. Both improve overall system reliability and user experience.
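
Platforms such as OpenRouter perform failover on their side, but a client-side sketch shows the underlying idea: try providers in priority order and return the first success. The function and provider callables below are hypothetical. A production version would catch only retryable errors (timeouts, HTTP 429, 5xx), add backoff, and log each failure.

```python
from typing import Callable, List, Sequence, Tuple


class AllProvidersFailed(RuntimeError):
    """Raised when every provider in the fallback chain has failed."""


def complete_with_fallback(
    prompt: str, providers: Sequence[Tuple[str, Callable[[str], str]]]
) -> Tuple[str, str]:
    """Return (provider_name, completion) from the first provider that succeeds."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # sketch only: real code should catch retryable errors
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors) or "no providers configured")
```

Returning the provider name alongside the text makes it easy to record which provider actually served each request.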

Developer Experience

Platforms standardize authentication, data models, and endpoints, providing consistent developer experiences and reducing learning curves compared to managing multiple provider-specific APIs. OpenRouter offers OpenAI SDK compatibility, while Hugging Face provides unified APIs for 45,000+ models.

Enterprise Integration

Enterprise platforms like Requesty and AWS Bedrock provide the security, compliance, and monitoring capabilities that large-scale deployments and organizational use cases require. They integrate with existing infrastructure, so organizations can adopt AI capabilities within their current security frameworks.

How to Choose

1. Evaluate Model Coverage and Variety

Check that a platform covers the models your use cases require. OpenRouter excels at LLM access, fal.ai at generative media, and Hugging Face at breadth across ML model types.

2. Compare Pricing Structures

Compare pricing models across platforms. OpenRouter offers competitive pricing with no subscription, while Replicate charges pay-per-use. Weigh these against your expected usage patterns and cost optimization opportunities.

3. Assess Performance and Latency

Assess your performance requirements. fal.ai claims up to 10x faster diffusion model inference, and Fireworks focuses on low latency. OpenRouter uses edge deployment to minimize latency between users and inference.
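
Vendor speed figures are a starting point, but measuring latency on your own prompts is more reliable. This sketch times any zero-argument callable, such as a function that sends one request through a platform's API. It then reports nearest-rank percentiles, because tail latency such as p95 often matters more than the average. `measure` and `percentile` are hypothetical helpers.

```python
import math
import time
from typing import Callable, List


def measure(call: Callable[[], object], runs: int = 20) -> List[float]:
    """Time `runs` sequential invocations of `call`, in seconds."""
    samples: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append(time.perf_counter() - start)
    return samples


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[rank - 1]
```

For example, `lat = measure(send_one_request, runs=50)` followed by `percentile(lat, 50)` and `percentile(lat, 95)` compares median and tail latency. Here `send_one_request` stands for your own hypothetical request function. Run the same measurement against each candidate platform from the region where your users are.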

4. Review Reliability and Support

Evaluate uptime guarantees and support options. OpenRouter provides automatic fallback, and fal.ai advertises 99.99% uptime. Enterprise platforms like AWS Bedrock provide comprehensive support and SLAs.
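
Uptime percentages are easier to compare when converted into allowed downtime: downtime = (1 - availability) x period. The second helper estimates the availability of a fallback chain. It relies on the strong, and often unrealistic, assumption that provider outages are independent. Both function names are hypothetical.

```python
from typing import Iterable

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def allowed_downtime_minutes(availability: float, period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Maximum downtime permitted by an availability target over a period."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * period_minutes


def fallback_availability(availabilities: Iterable[float]) -> float:
    """Availability of a chain that succeeds if any provider is up.

    Assumes outages are independent, which real providers often violate
    (shared clouds, regions, or upstream dependencies).
    """
    all_down = 1.0
    for a in availabilities:
        all_down *= 1.0 - a
    return 1.0 - all_down
```

A 99.99% target allows about 52.56 minutes of downtime per 365-day year, which is 525,600 minutes x 0.0001. Over a 30-day month (`period_minutes=30 * 24 * 60`), it allows about 4.32 minutes. Under the independence assumption, `fallback_availability([0.999, 0.999])` is about 0.999999.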

5. Consider Integration Complexity

Consider your integration requirements. OpenRouter offers OpenAI SDK compatibility, and Hugging Face provides unified APIs. Vertex AI and AWS Bedrock integrate natively with their respective cloud ecosystems.

Conclusion

Unified API platforms simplify AI integration by providing a single interface to many models and services. OpenRouter leads for LLM access with its universal interface and competitive pricing. fal.ai excels at generative media with fast inference, and Hugging Face offers the largest ML model collection.

Choose platforms that match your specific needs: model coverage, pricing, performance requirements, reliability guarantees, and integration complexity. These platforms reduce integration overhead and maintenance costs and enable faster time to market for AI-powered applications.